1 Second Department of Neurology, AHEPA General Hospital, Aristotle University of Thessaloniki, 54634 Thessaloniki, Greece

2 Department of Histology-Embryology, School of Medicine, Aristotle University of Thessaloniki, 54124 Thessaloniki, Greece

Abstract

It is becoming increasingly evident that artificial intelligence (AI) development draws inspiration from the architecture and functions of the human brain. This manuscript examines the alignment between key brain regions (such as the brainstem, sensory cortices, basal ganglia, thalamus, limbic system, and prefrontal cortex) and AI paradigms, including generic AI, machine learning, deep learning, and artificial general intelligence (AGI). By mapping these neural and computational architectures, I herein highlight how AI models progressively mimic the brain’s complexity, from basic pattern recognition and association to advanced reasoning. Current challenges, such as overcoming learning limitations and achieving comparable neuroplasticity, are addressed alongside emerging innovations like neuromorphic computing. Given the rapid pace of AI advancements in recent years, this work underscores the importance of continuously reassessing our understanding as technology evolves exponentially.

Keywords

- human brain

- artificial neural networks

- artificial intelligence

- deep learning

- neuromorphic computing

The human embryonic brain begins forming just three weeks after fertilization as an oval-shaped disk of tissue known as the neural plate. This structure folds and fuses into the neural tube, where the neuroepithelium initiates neurogenesis, leading to rapid neuron proliferation. By the early second month, the rostral part of this rudimentary structure differentiates into three, and then five, enlargements (vesicles), while the caudal part elongates to form the spinal cord. During the second trimester, glial cells proliferate, and millions of nerves branch out throughout the body. The fetus begins to form synapses, leading to tiny, often undetected movements, which the mother typically senses at around eighteen weeks. The already developed brainstem, responsible for vital functions like heart rate, breathing, and blood pressure, enables extremely premature babies to potentially survive outside the womb. By the last trimester, at around 28 weeks, nerve cells begin to be covered in a myelin sheath, which facilitates rapid electrical impulse transmission and is marked by increased reflex movements. The cerebellum experiences the fastest growth, and the baby’s activity significantly increases. As the brain triples in weight, the cerebrum develops deeper grooves, yet babies are born with a primitive cerebral cortex, which explains why much of their emotional and cognitive maturation occurs postnatally [1].

In the first years of life, an infant’s brain undergoes significant strengthening, with an increase in white matter volume [2] that surpasses that found in chimpanzees [3], while forming new neural connections, a process that continues to be refined until around age 25. This ongoing adaptability, known as neuroplasticity, is the brain’s ability to reorganize its connections in response to experiences and environmental stimuli, a concept first experimentally demonstrated in the early 1960s by Diamond MC and others [4]. Neuroplasticity involves not only neurons but also glial cells, particularly microglia. Interestingly, although the human brain contains approximately 86 billion neurons [5], brain size alone does not dictate intelligence. For example, elephants have large brains but lack sophisticated problem-solving abilities, while bees, with far fewer neurons, display complex behaviors. Greater neuroplasticity is linked to higher intelligence and regenerative capacity. A striking example is the planarian flatworm, which can fully regenerate not only its nervous system, including a two-lobed brain, but also its whole body, exhibiting near immortality [6]. While genes were traditionally viewed as the primary drivers of organ development, recent research suggests that bioelectric signals, biomechanical triggers, training, and non-neural memory also play crucial roles in instructing this adaptation [7].

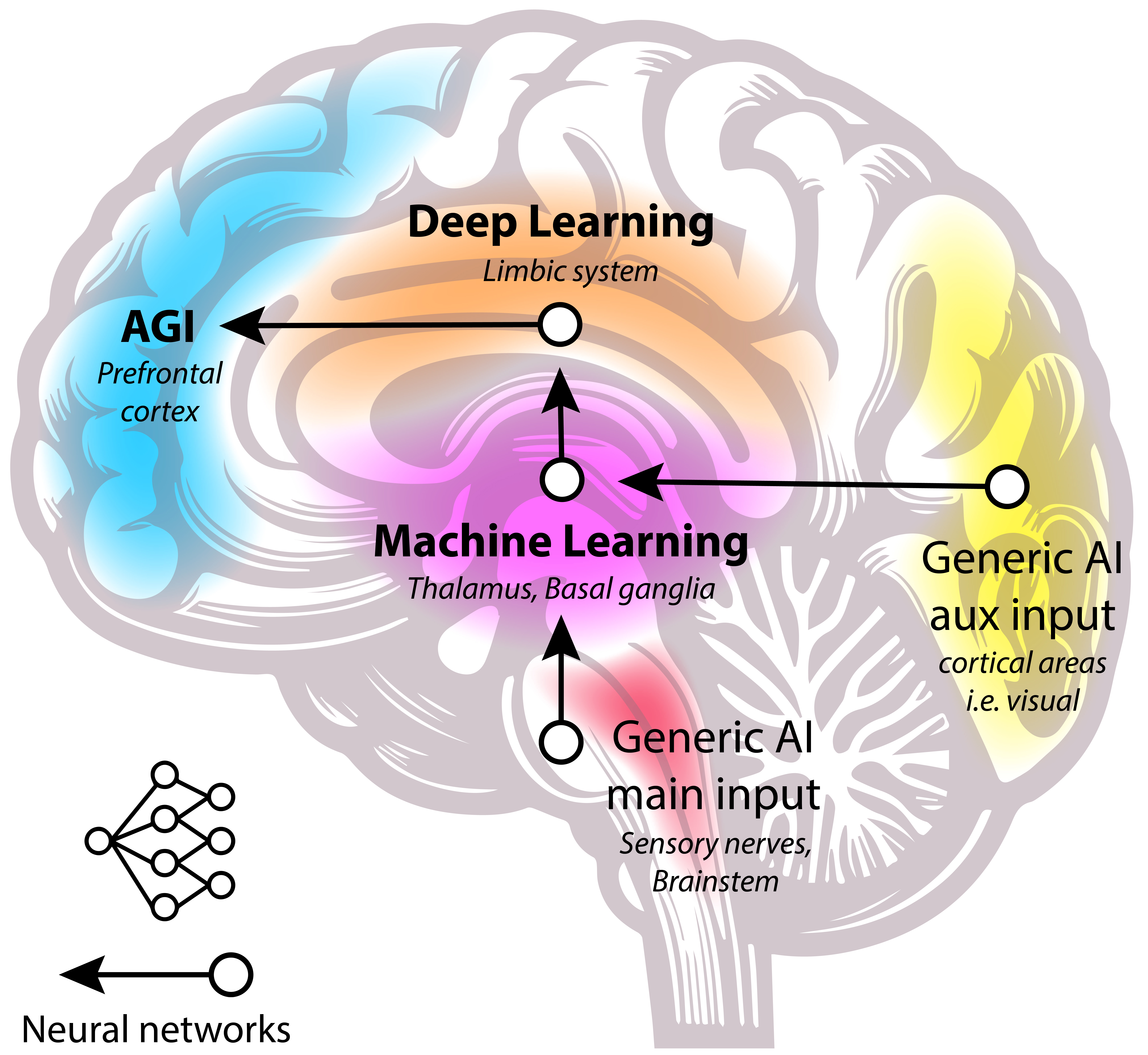

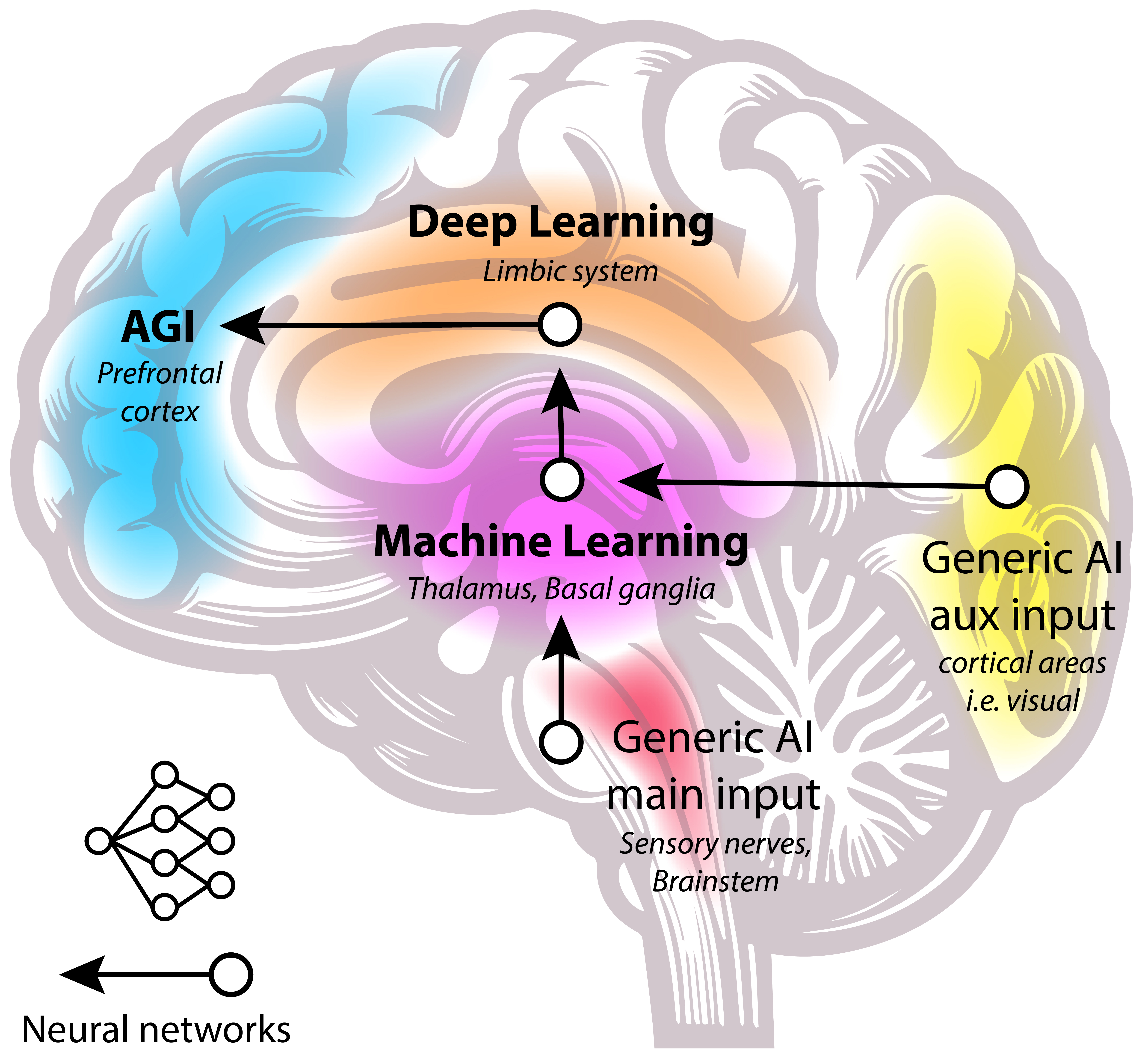

Since ancient times, philosophers like Plato and later Descartes sought to understand the nature of human consciousness and thought. In the 1960s, neuroscientist Paul MacLean introduced a conceptual model to explain how different parts of the brain might govern various functions. Although outdated, his model provided a framework for linking specific brain structures to different cognitive functions such as instincts, emotions, and rational thought. However, contemporary neuroscience reveals that mental activities such as primal instincts, emotional regulation, and logical reasoning do not reside in separate, distinct brain regions. Instead, these functions are the result of complex interactions across multiple areas of the brain [8]. The brain’s cognitive functions are organized within large-scale networks that span various critical brain regions (Fig. 1). The visual cortex processes sensory information, particularly visual data, forming the foundation of perceptual awareness. The basal ganglia and thalamus integrate and relay sensory and motor signals, with the basal ganglia’s striatum regulating movement and reward, and the thalamus acting as a sensory hub. The hippocampus and cingulate cortex are central to memory and cognitive control, with the hippocampus managing memory and the cingulate cortex (both anterior and posterior) overseeing emotional regulation and cognitive processing. These regions operate within major networks: the default mode network (DMN) integrates self-referential thought and memory, the frontoparietal network (FPN) supports executive functions and attention, and the salience network (SN) detects salient stimuli and maintains cognitive control. Lastly, at the forefront of executive functions and complex decision-making is the prefrontal cortex (PFC), particularly the medial PFC (mPFC), which integrates information across these networks to manage high-level cognitive processes.

Fig. 1.

Brain mimicking artificial intelligence (AI). The simplified figure demonstrates the parallels between key neural regions and AI concepts, starting with the main input, which converges afferent stimuli from the periphery. These stimuli are part of proprioception and include pure sensory cranial nerves, mixed nerves primarily located in the brainstem (red), along with parasympathetic projections from the autonomic nervous system. Auxiliary inputs arise from various cortical regions within the CNS, such as the visual cortex (yellow), somatosensory cortex, and cerebellar cortex. Altogether, these regions handle basic sensory processing, analogous to generic AI systems that recognize patterns and process inputs at a fundamental level. Machine Learning can be correlated to the basal ganglia and thalamus (magenta), regions critical for integrating sensory information and adaptive decision-making. Deep Learning resonates fittingly with the limbic system, including the hippocampus, amygdala, and cingulate cortex (orange), which collectively manage complex data processing, memory, and emotional regulation within neural networks like the DMN and SN. Lastly, artificial general intelligence (AGI) is associated with the prefrontal cortex (cyan), particularly the mPFC, which oversees advanced reasoning and executive functions, reflecting the capabilities envisioned for AGI systems. The figure emphasizes the influence of neuron-level biology on AI neural networks and suggests a focus on how insights from brain circuitry inform AI design. CNS, central nervous system; DMN, default mode network; SN, salience network; mPFC, medial PFC. Created with Adobe Illustrator (Version 29.3.1, Adobe, Waltham, MA, USA).

The earliest encephalization began in simple organisms like flatworms around 500 million years ago, driven by the need to coordinate nerve cells around the gut to meet energy demands. True brains emerged later as organisms developed hunting and predation skills, necessitating the coordination of motor functions for more efficient hunting and enhanced sensory input to avoid predation. About 7 million years ago, early hominins appeared, gradually evolving from using simple tools to more complex ones. Approximately 2 million years ago, Homo erectus harnessed fire, invented tools, and developed the first rudimentary cultures. Homo sapiens emerged around 300,000 years ago with even larger and more complex brains, eventually leading to the advent of agriculture and organized civilizations around 3500 B.C. Rapid advancements in medicine began about 200 years ago, and in 1950, foundational concepts for artificial intelligence (AI), such as the Turing Test, were established [9]. The internet age began roughly 35 years ago, accelerating technological progress. The first AI chatbot, ELIZA, was developed in 1964, and by 2022, the world was introduced to Chat Generative Pre-Trained Transformer (ChatGPT), a large language model (LLM) built on a transformer neural network. But why neural networks, and how did we advance so quickly to this point?

Neural networks, inspired by the human brain, are AI systems designed to process data and learn autonomously [10]. There are different types of artificial neural networks (ANNs), such as multi-layer perceptrons (MLPs), promising alternatives known as Kolmogorov-Arnold networks (KANs), convolutional neural networks (CNNs), and recurrent neural networks (RNNs). The simplest form, a perceptron, mimics a single neuron with one or multiple inputs, a processor, and a single output. While traditional machine learning (ML) models rely on structured data and significant human intervention, deep learning (DL), a subset of ML, allows models to handle unstructured data, like text and images, through more automated and complex learning. ANNs form the core of DL algorithms, enabling systems to adapt to new patterns, often through unsupervised learning. Essentially, AI is the overarching system, with ML as a subset, DL as a further specialization, and ANNs as the foundation of these models, akin to a Russian nesting doll (matryoshka) where each concept contains the next. Interestingly, these AI paradigms can be mapped to brain regions, reflecting increasing complexity and functionality. Generic AI parallels the visual cortex, both handling basic sensory processing and pattern recognition. ML aligns with the basal ganglia and thalamus, where decision-making and adaptive learning occur. DL mirrors the hippocampus and cingulate cortex, with both managing complex data processing, memory, and cognitive control. At the highest level, artificial general intelligence (AGI) corresponds to the prefrontal cortex, the center of advanced reasoning, planning, and executive function (Fig. 1).
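The perceptron described above (inputs, a processor, and a single output) can be illustrated with a minimal sketch. The logical AND task, the learning rate, the number of epochs, and the function names are assumptions chosen purely for illustration; they do not come from the cited literature.

```python
# Minimal perceptron sketch: weighted inputs, a threshold "processor", one output.
# The AND task, learning rate, and epoch count are illustrative assumptions.

def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs plus bias is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(samples, n_inputs, lr=1.0, epochs=20):
    """Classic perceptron rule: shift weights in proportion to the output error."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Truth table for logical AND, a linearly separable toy problem.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(AND_SAMPLES, n_inputs=2)
outputs = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(outputs)  # [0, 0, 0, 1]
```

A single unit of this kind can only separate classes with a straight line (or hyperplane), which is one reason multi-layer networks such as MLPs stack many of them.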

Neurons transmit information through chemical and electrical signals at synapses, forming the brain’s intricate wiring, where “neurons that fire together, wire together”. The brain, with over 100 trillion synapses, stores and processes information much like a computer, where the brain matter acts as the hardware and the transmitted information as the software. While conventional software is programmed to perform specific tasks and only changes when updated by humans, AI is designed to learn and improve its performance autonomously. AI systems rely on techniques like backpropagation to refine their models, but they still fall short of the brain’s unmatched ability to adapt and learn from minimal exposure to new information, since the brain constantly reshapes itself physically, as new synapses form or dissolve with every piece of information processed. Interestingly, the third generation of ANNs, named spiking neural networks (SNNs), mimics the physiology of human neurons and their asynchronous operation even more closely [11]. In order to run better AI, we need better hardware to sustain and feed these advanced algorithms. This raises a pivotal question: should we strive to build silicon-based supercomputers that emulate the brain’s complexity, or should we explore integrating advanced computational systems directly into our neural tissue? As we push the boundaries of both AI and neurotechnology, we glimpse the possibilities and challenges inherent in each trajectory.
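To make the spiking idea concrete, the sketch below simulates a leaky integrate-and-fire (LIF) neuron, a common textbook abstraction of a spiking unit. The choice of the LIF model and every parameter value (time constant, threshold, reset value, input currents) are illustrative assumptions and are not taken from reference [11].

```python
# Leaky integrate-and-fire (LIF) neuron, simulated with forward Euler steps.
# All parameter values are illustrative assumptions, not measured quantities.

def simulate_lif(input_current, dt=1.0, tau=10.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0):
    """Return the step indices at which the neuron emits a spike."""
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        # The membrane potential leaks toward rest while integrating the input.
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_thresh:
            spikes.append(step)  # a discrete spike event
            v = v_reset          # reset the potential after firing
    return spikes


weak = simulate_lif([0.5] * 200)    # drive that settles below threshold
strong = simulate_lif([2.0] * 200)  # drive that repeatedly crosses threshold

print(len(weak), len(strong))
```

Unlike the perceptron, which produces an output for every input, this unit stays silent until its potential crosses the threshold, so information is carried by the timing of sparse events rather than by continuous activations.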

Hardware-wise, traditional computers, based on the von Neumann architecture, separate computing and memory functions, creating a bottleneck that limits efficiency. As transistor sizes shrink to the atomic scale, this design faces increasing challenges, including excessive heat generation and energy consumption. In response, neuromorphic computing, a new approach inspired by the brain’s seamless integration of memory and processing into single neurons to minimize latency, seeks to overcome these limitations by emulating the dense, interconnected neuron clusters of the neocortex. To grasp the potential of this technology, consider how far we have come: from the BRAIN Initiative and the TrueNorth chip from International Business Machines (IBM) Corporation to Intel’s Loihi SNN chips, and on to Hewlett Packard Enterprise’s Frontier supercomputer, which can perform over one quintillion operations per second but requires 22.7 megawatts of power and occupies 680 square meters. This is a stark contrast to the human brain, which operates on just 20 watts of power within a compact 1500 cm³ space, achieving an extraordinary level of efficiency [12]. Despite these advances, the energy demands and physical size of even the most advanced systems remain significant hurdles, challenges that may be addressed as quantum computing advances [13].
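The scale of this contrast can be made explicit with a short back-of-the-envelope calculation that uses only the figures quoted above. The variable names are hypothetical, and no operation count is assigned to the brain because none is given here.

```python
# Back-of-the-envelope comparison using only the figures quoted in the text.
FRONTIER_OPS_PER_SEC = 1e18  # "over one quintillion operations per second"
FRONTIER_POWER_W = 22.7e6    # 22.7 megawatts
BRAIN_POWER_W = 20.0         # 20 watts

# How many times more power Frontier draws than a human brain.
power_ratio = FRONTIER_POWER_W / BRAIN_POWER_W

# Operations Frontier performs per joule of energy (ops per second per watt).
ops_per_joule = FRONTIER_OPS_PER_SEC / FRONTIER_POWER_W

print(f"Power ratio (Frontier / brain): {power_ratio:,.0f}")
print(f"Frontier operations per joule: {ops_per_joule:.3e}")
```

On these numbers, Frontier draws roughly 1.1 million times the power of a single brain while delivering on the order of 4.4 × 10^10 operations per joule.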

The reverse approach, implanting hardware chips into neural tissue, is also gaining momentum. Technologies such as computed tomography (CT) scans and functional magnetic resonance imaging (fMRI) allow us to map brain networks with unprecedented detail, paving the way for brain-computer interfaces (BCIs) like Neuralink. Additionally, bio-devices and non-invasive techniques like transcranial stimulation are increasingly used to correct neural issues, leveraging our understanding of the brain’s structure. However, research involving human brain tissue raises ethical concerns. To address this, scientists have turned to brain organoids (miniature, lab-grown brains) and other synthetic biological intelligence platforms [14] as ethical alternatives. All in all, AI, with its superior pattern recognition, plays a critical role in analyzing various models, helping to sift through noise and improve signal clarity, ultimately refining our understanding of brain development and function. Nevertheless, while multiple selective pressures likely shaped human brain evolution, no single theory fully accounts for our unique brain capacity, a puzzle that may partially relate to the fetal head-down posture in our species. As we explore this vast array of innovations, we stand at the intersection of carbon-based (human) structures, silicon-based (current AI hardware) systems, and the future potential of quantum-based computing, each bringing us closer to blurring the lines between biological and artificial intelligence.

The neural network literature is vast, particularly following the state-of-the-art applications of DL techniques such as backpropagation, CNNs, and RNNs [15]. These advancements have inadvertently highlighted the similarities but also the inconsistencies between brain and AI information processing [16]. While ANNs have made significant strides, they still face substantial limitations compared to biological systems, particularly in areas such as lifelong learning and memory retention. A key issue is catastrophic interference, also known as catastrophic forgetting, where AI models struggle to retain previously learned information when new data is introduced [17]. Unlike the brain, which employs mechanisms like synaptic plasticity to consolidate important memories and prune irrelevant ones, AI systems overwrite previously learned information when encountering new information. A recent study on replay mechanisms, inspired by biological systems, suggests potential solutions, but these methods remain underdeveloped in AI, lacking the robustness observed in natural learning [18]. Lastly, AI networks lack the dynamic interplay of top-down and bottom-up control seen in the brain. While the human brain dynamically integrates sensory inputs and higher-order decision-making in a bidirectional manner, current AI architectures remain largely unidirectional, limiting their adaptability and responsiveness to complex environments.
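Catastrophic forgetting, and the way replay can counteract it, can be reproduced in a deliberately tiny setting. The sketch below trains a three-weight linear model by gradient descent on a hypothetical task A, then on a hypothetical task B, with and without rehearsing the task A samples. The tasks, data points, learning rate, and step count are all assumptions made for illustration; this naive rehearsal scheme is a stand-in and not the method of the study cited as [18].

```python
# Toy demonstration of catastrophic forgetting and naive replay (rehearsal).
# Tasks, data, and hyperparameters are illustrative assumptions only.

def predict(w, x):
    """Linear model: dot product of weights and inputs."""
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, data):
    """Mean squared error of the model on a list of (inputs, target) pairs."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def train(w, data, lr=0.1, steps=5000):
    """Full-batch gradient descent on the mean squared error; returns new weights."""
    w = list(w)
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2.0 * err * xi / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


# Both tasks are jointly solvable by the weights (1, 1, 2).
TASK_A = [((1.0, 0.0, 0.0), 1.0), ((1.0, 1.0, 0.0), 2.0)]
TASK_B = [((1.0, 0.0, 1.0), 3.0)]

w_a = train([0.0, 0.0, 0.0], TASK_A)
loss_a_before = mse(w_a, TASK_A)        # task A learned

w_seq = train(w_a, TASK_B)              # then task B only
loss_a_forgot = mse(w_seq, TASK_A)      # task A error rises sharply

w_replay = train(w_a, TASK_B + TASK_A)  # task B plus replayed task A samples
loss_a_replay = mse(w_replay, TASK_A)   # task A is retained

print(loss_a_before, loss_a_forgot, loss_a_replay)
```

Training on task B alone moves a weight that task A also depends on, so the task A error rises from about 0 to about 1 even though a joint solution exists; interleaving the old samples steers the model to that joint solution instead.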

This opinion article explored the parallels between brain regions (i.e., from sensory integration in the thalamus and basal ganglia to complex decision-making in the prefrontal cortex) and the progression of artificial systems, from elemental AI handling inputs like text, images, and speech to the integration of ML and DL algorithms driving advancements toward AGI. SNNs and neuromorphic computing aim to emulate brain neuroplasticity, enabling dynamic memory mechanisms that could mitigate catastrophic forgetting, while overcoming challenges related to volume, space, and velocity in neural processing. These technologies also hold promise for introducing top-down and bottom-up control within AI systems, mirroring the hierarchical and bidirectional processing found in the brain. More specifically, SNNs could facilitate more efficient memory consolidation, whereas neuromorphic computing may enhance adaptability by integrating high-level goals with sensory processing. While these analogies reveal valuable insights, they also expose critical limitations. Constructing systems that approach human intelligence remains an existentially precarious endeavor, demanding careful forecasting and mitigation of its societal impacts. Despite these challenges, I posit that integrating neuroscience-inspired mechanisms into AI, paired with the implementation of robust regulations to ensure safety and ethical alignment, will not only bridge existing gaps but also unlock transformative potential, advancing both scientific discovery and the future of human-centric technology.

The single author PT was responsible for the conception of ideas presented, writing, and the entire preparation of this manuscript. The author read and approved the final manuscript. The author has participated sufficiently in the work and agreed to be accountable for all aspects of the work.

Not applicable.

Not applicable.

This research received no external funding.

The author declares no conflict of interest.

References

Publisher’s Note: IMR Press stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.